As algorithmic intelligence grows in power and scope, it intervenes more and more in daily life. For machine (or robot) intelligence to do anything useful, it needs access to enough data to reach a reasonably accurate conclusion about what it should do. The more examples it has to draw on, the more accurate it is likely to be. Automated learning systems use large datasets and neural networks to improve themselves continually by working through the same problem over and over. That is how a computer can learn to play a game as complex as Go and end up beating the world's best players: layers of neural networks identify patterns germane to the problem of winning. This is quite different from the way Deep Blue beat Kasparov at chess, by calculating a vast but finite number of possible moves every second.

Google, Facebook and Amazon use this kind of machine intelligence, deep learning, on a massive scale to drive ever more targeted advertising to their customers. They draw on the many millions of posts, status updates, images and metadata entities available to them, which makes them extremely valuable to anyone trying to find people to sell things to. The old adage of freemium web services used to be ‘if the product is free, the user is the product’, a phrase now qualified to read ‘the user is the training data’.

The data available via social media, used on a vast scale to train machine intelligences, are our thoughts, opinions, rants and feelings. In other words, all our foibles, prejudices and failings are now fuel for machines so complex that they elude human understanding. Indeed, these systems have been deliberately designed to learn for themselves, largely independent of any conscious human input. That is why Tay, a Microsoft chatbot let loose on Twitter, was posting neo-Nazi tweets within four hours. For designers interested in the future of digital systems and interfaces, this implies a problematic empathy gap, one that we should perhaps start thinking about.

If we want robots that respond to our better natures, we need either to behave differently ourselves or to design robots that discern and express human-like emotions. Preferably both.

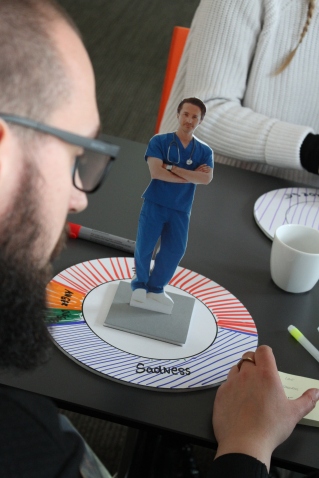

To that end, I designed and ran a workshop on robot empathy for UAL at the British Council in Berlin on Saturday April 28 2017. Participants started by deciding what proportions of a list of emotions they would give to each of a set of robots: a robot surgeon, robot musician, robot soldier, robot priest and robot waiter. Some of the emotions were somewhat unexpected, e.g. cowardice for the soldier and anger for the musician, and the format was designed to be both physical and visual. One outcome was a sad surgeon, upset because he was unable to heal more people more fully.

Using this method, the resulting donut charts of emotional proportions were directly connected to the robot cutouts, which allowed participants to decide on a mode and coding for the emotions they wanted to portray. Unexpectedly, the group working with the robot soldier gave it no cowardice at all, whereas the robot priest did end up with a healthy amount of doubt.

The next step was to draw narrative sequences, comic-book style, of a possible scenario in which the chosen robots would show, use or otherwise feature the proportions of emotions displayed in their donut charts in a real-world situation. The idea was, firstly, to get participants thinking less abstractly about what a robot with emotions would or could do and, secondly, to get them thinking more abstractly about situations, away from the cardboard cutouts and the very specific characteristics of the images. This apparently paradoxical method may seem counterintuitive, but participants had no trouble holding the two positions simultaneously.

The risk in a public workshop setting is that participants view a drawing task as a test of their artistic and creative abilities. In this case, it was necessary to reassure people that they were not being assessed on their drawing and that the exercise was exploratory, an act of inquisitive curiosity. Designers are often surprised at how difficult participants (including students) find it to accept permission to be childlike or to produce unfinished, low-resolution prototypes. The choice of format, a comic drawing, helps in this regard, since comics are associated with humour, childhood and narrative, and storyboards with planning, sketching and sequence. Outcomes from this exercise included a priest turned thief, an aggressive soldier turned saviour, and a tragic waiter tired of consistently being employee of the month. Scenario building proved to be a way of assigning behaviours to the robot characters and of testing their emotional responses in different situations.

The final part of the workshop was to design costumes for the robots and act out the scenarios in the storyboards. This meant improvising costumes from a range of materials, including felt strips, paper straws, plastic foil and foam board, then devising a mini-performance from the storyboard and acting it out in front of the group. The idea was to guide participants from two-dimensional representation, through narrative sequence, to fully embodied performative interpretation. Participants often resist this step, as placing oneself fully into a performance requires a high degree of trust, and trust often takes longer to build than a two-hour workshop permits. In this case, participants had just 30 minutes to make costumes and perform the scenarios.

The top image shows a participant having an idealised female body shape drawn on his costume to indicate how female classical musicians are expected to conform to dominant expectations of beauty. The next image shows a robot doctor altruistically helping a passer-by, applying a bandage to her leg. Following this is a scenario where a robot priest goes to confess her theft of an apple to a human priest. Finally, in the image above a robot soldier has freed captives from a hostage situation.

The purpose of the workshop was to encourage participants to reflect on the complex moral and ethical issues arising from advanced machine intelligence; the methods were a series of exploratory creative exercises moving from analysis to storytelling to performance. Materials were deliberately non-technical to allow for wide accessibility and for abstraction. Outcomes displayed a remarkable degree of diversity and demonstrated encouraging levels of engagement from participants.